Windowed total variation denoising and noise variance monitoring

Abstract

In previous work, we proposed a real-time Total Variation (TV) denoising method with an automatic choice of the hyper-parameter $\lambda$, whose good performance opens a wide field of applications. In this article, we adapt this method to non-stationary signals using a sliding window, and propose a noise variance monitoring method. Simulation results show that our proposal follows the variation of the noise variance well.

I Introduction

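The signal collected by a sensor can be modeled as $y = x + \varepsilon$: a random noise $\varepsilon$ is added to the useful physical quantity $x$, with $\mathbb{E}(\varepsilon_i) = 0$ and $\mathbb{V}(\varepsilon_i) = \sigma^2$.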

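We aim to recover the unknown vector $x = (x_1, \dots, x_n)$ from the noisy sample vector $y = (y_1, \dots, y_n)$, with $y_i$ the sample at time $t_i$, by minimizing the Total Variation (TV) restoration functional:

$$E(x, \lambda) = \frac{1}{2}\sum_{i=1}^{n} \delta_i \,(y_i - x_i)^2 + \lambda \sum_{i=1}^{n-1} |x_{i+1} - x_i| \tag{1}$$

with the sampling period vector $\delta = (\delta_1, \dots, \delta_n)$, where $\delta_i > 0$ is the sampling period associated with the sample $y_i$. The restored signal is given by:

$$\hat{x}(\lambda) = \operatorname*{arg\,min}_{x \in \mathbb{R}^n} E(x, \lambda) \tag{2}$$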
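As a minimal sketch, the functional (1) can be evaluated directly. It assumes the weighted form written above, with the sampling periods weighting the fidelity term; the name `tv_energy` is hypothetical and not taken from [3]. The usage lines check that, for $\lambda = 0.25$ and $y = (0, 1, 0)$, the smoother candidate $(0.25, 0.5, 0.25)$ has a lower energy than $x = y$.

```python
def tv_energy(x, y, delta, lam):
    """Evaluate the TV functional (1) for a candidate restoration x.

    Assumed form: 0.5 * sum_i delta_i * (y_i - x_i)**2 + lam * sum_i |x_{i+1} - x_i|.
    """
    if not len(x) == len(y) == len(delta):
        raise ValueError("x, y and delta must have the same length")
    fidelity = 0.5 * sum(d * (yi - xi) ** 2 for d, yi, xi in zip(delta, y, x))
    total_variation = sum(abs(x[i + 1] - x[i]) for i in range(len(x) - 1))
    return fidelity + lam * total_variation


if __name__ == "__main__":
    y = [0.0, 1.0, 0.0]
    delta = [1.0, 1.0, 1.0]
    print(tv_energy(y, y, delta, 0.25))                  # 0.5: no fidelity cost, TV = 2
    print(tv_energy([0.25, 0.5, 0.25], y, delta, 0.25))  # 0.3125: smoother and cheaper
```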

The Total Variation based restoration method was first proposed in [1]. The authors of [2] show a one-to-one correspondence between the noise variance and $\lambda$ under the hypothesis of a constant noise variance.

Motivated by the local influence of a new sample on the current restoration, we proposed in [3] a new online TV restoration algorithm with an automatic choice of $\lambda$ for stationary 1D signals, following the work of [4] and [5]. Simulations show that our choice of $\lambda$ performs similarly to existing methods (SURE [6] and cross-validation). Furthermore, our method is suited to real-time applications, especially for monitoring a large number of sensors in industrial plants.

In this paper, we adapt our online TV restoration to non-stationary signals and propose a noise variance monitoring method. The key idea is to apply our method on sliding windows, assuming that the signal is locally stationary. This hypothesis is strong but already commonly used (e.g. in Fourier analysis and wavelet transforms). In order to apply our method to non-stationary signals, two problems must be solved:

- Adaptation of the online method to the sliding window;
- Choice of the window length (noted $w$).

The first point ensures the efficiency of the real-time estimation, while the second guarantees the good performance of the method. Indeed, the choice of $w$ requires a compromise between the local stationarity assumption and the restoration performance: the local influence of a new sample vanishes inside a large window, but local stationarity may then not hold. In this article, we address the first point, assuming that the influence of a new sample stays inside the current window. The choice of the window size is the immediate next step of this work.

An increase of the ground noise is one of the indicators of sensor or production line failures. This motivates us to build a real-time application that detects changes in the noise variance by tracking the windowed restoration residuals, based on the automatic determination of the hyper-parameter $\lambda$.

Here, some approaches for the estimation of the noise variance are listed:

- Median absolute deviation (MAD), proposed in [12], is a heuristic method widely used in many applications, especially for choosing the threshold in wavelet restoration: $\sigma$ is estimated by the median absolute deviation of the finest-scale wavelet coefficients of the signal, divided by 0.6745.
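For comparison, the MAD rule of [12] can be sketched with Haar finest-scale detail coefficients. The choice of the Haar wavelet and the function name `mad_noise_std` are assumptions; the coefficients are treated as zero-median, so the median absolute deviation reduces to the median of their absolute values, and 0.6745 is approximately the 0.75 quantile of the standard normal distribution.

```python
import math
import random
import statistics


def mad_noise_std(y):
    """MAD estimate of the noise standard deviation (rule of [12], Haar version).

    Uses the finest-scale Haar detail coefficients d_i = (y[2i+1] - y[2i]) / sqrt(2),
    assumed to have zero median, and returns median(|d_i|) / 0.6745.
    """
    if len(y) < 2:
        raise ValueError("need at least two samples")
    details = [(y[2 * i + 1] - y[2 * i]) / math.sqrt(2) for i in range(len(y) // 2)]
    return statistics.median(abs(d) for d in details) / 0.6745


if __name__ == "__main__":
    random.seed(0)
    # Piecewise constant signal plus Gaussian noise with standard deviation 0.5.
    x = [0.0] * 500 + [3.0] * 500
    y = [xi + random.gauss(0.0, 0.5) for xi in x]
    print(mad_noise_std(y))  # expected to be close to 0.5
```

Because the Haar details cancel the constant parts of the signal, the estimate is barely affected by the piecewise constant signal itself, which is why this rule is a natural baseline here.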

Compared with the previously cited methods, our approach, based on the separation of signal and noise, provides a procedure for simultaneous signal denoising and variance tracking, while being implementable as an online application. Thanks to its low time and space complexity, our proposal is well suited to cases with limited computational resources.

The paper is organized as follows: Section II presents our TV-based denoising method with an automatic choice of parameter. Section III adapts our online implementation to sliding windows. Section IV presents an application of our algorithms, noise variance monitoring, and compares it with an existing method. Finally, conclusions and perspectives are given in Section V.

II Automatic total variation denoising method

In this section, we present the automatic TV denoising method proposed in [3]. We aim to recover the unknown vector $x$ from the noisy samples $y$ by minimizing (1); the restored signal is given by (2).

Since we work on a finite sample of the signal, the solution $\hat{x}$ can be seen as piecewise constant. We use the segment representation of [5] for the constant pieces: a set of consecutive indices $\{i, \dots, j\}$ whose restored values are equal, $\hat{x}_i = \dots = \hat{x}_j$, is called a segment if it cannot be enlarged, i.e. if $\hat{x}_{i-1} \neq \hat{x}_i$ (or $i = 1$) and $\hat{x}_{j+1} \neq \hat{x}_j$ (or $j = n$). The number of segments of $\hat{x}$ is noted $K$. The following notations are introduced for the $k$-th segment:

- Index set $I_k$, with $k \in \{1, \dots, K\}$, containing the points inside the segment;
- Segment level $u_k$, the common restored value of the points of $I_k$.

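An equivalent representation of $\hat{x}$ is provided by the couple $(U, C)$, with $U = (u_1, \dots, u_K)$ the set of segment levels and $C = \{i : \hat{x}_i \neq \hat{x}_{i+1}\}$ the cutting set. Knowing the cutting set $C$, let $s = (s_0, \dots, s_K)$ with $s_k = \operatorname{sign}(u_{k+1} - u_k)$ for $1 \le k \le K-1$, and $s_k = 0$ for $k = 0$ and $k = K$. The level of segment $k$ is then given by:

$$u_k = \bar{y}_k + \frac{\lambda}{l_k}\,(s_k - s_{k-1}) \tag{3}$$

with $l_k = \sum_{i \in I_k} \delta_i$ the segment length and $\bar{y}_k = \frac{1}{l_k}\sum_{i \in I_k} \delta_i\, y_i$ the mean value inside segment $k$.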
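Equation (3) can be sketched as follows, assuming the segments and the jump signs are already known. The function name `segment_levels` and its argument layout (0-based, inclusive index pairs) are hypothetical illustration choices, and the weighted length and mean follow the weighted reading of (1).

```python
def segment_levels(y, delta, segments, signs, lam):
    """Segment levels u_k from equation (3).

    segments: list of (first, last) index pairs (0-based, inclusive) covering y.
    signs: jump signs s_1..s_{K-1}, with s_k = sign(u_{k+1} - u_k); s_0 = s_K = 0.
    """
    if len(signs) != len(segments) - 1:
        raise ValueError("need exactly one sign per junction between segments")
    s = [0] + list(signs) + [0]
    levels = []
    for k, (first, last) in enumerate(segments):
        idx = range(first, last + 1)
        length = sum(delta[i] for i in idx)                  # l_k
        mean = sum(delta[i] * y[i] for i in idx) / length    # weighted mean of y on I_k
        levels.append(mean + lam / length * (s[k + 1] - s[k]))
    return levels


if __name__ == "__main__":
    # Two plateaus pulled towards each other by lam = 1.
    print(segment_levels([0.0, 0.0, 10.0, 10.0], [1.0] * 4, [(0, 1), (2, 3)], [1], 1.0))
    # -> [0.5, 9.5]
```

For $y = (0, 1, 0)$ with unit sampling periods, singleton segments, signs $(+1, -1)$ and $\lambda = 0.25$, the same function returns $(0.25, 0.5, 0.25)$: the local maximum is lowered by $2\lambda/l_k$ and the two borders are raised by $\lambda/l_k$.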

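Different values of $\lambda$ may provide solutions with distinct cutting sets: $\lambda = 0$ gives $\hat{x} = y$, while a sufficiently large $\lambda$ yields the most parsimonious solution, a constant signal. The authors of [4] and [5] show that there exists an increasing sequence of values of $\lambda$ such that two segments are merged together each time $\lambda$ crosses one of these values. In [3], we proposed a fast algorithm to estimate the dynamic of the restoration as a function of $\lambda$; this dynamic is saved in $\Lambda = (\Lambda_1, \dots, \Lambda_{n-1})$, with $\Lambda_i$ the value of $\lambda$ for which the points $i$ and $i+1$ are merged into the same segment. $\Lambda$ allows the computation of the cutting set $C(\lambda) = \{i : \Lambda_i > \lambda\}$ and also of the restoration (3) for every $\lambda$.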
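To illustrate how $\Lambda$ encodes the whole path, here is a naive event-driven sketch that computes it by following the merges as $\lambda$ grows, then reads the cutting set off $\Lambda$. This is not Algorithm 1 of [3]: it costs $O(n^2)$, and it assumes, in line with the merge-only behaviour described by [4] and [5], that segments never split and that each jump keeps the sign it has in $y$, so that between two merges every level moves linearly with slope $(s_k - s_{k-1})/l_k$ as in (3). The function names `merge_values` and `cutting_set` are hypothetical, and indices are 0-based.

```python
import math


def merge_values(y, delta=None):
    """Return lam_values, where lam_values[j] is the lambda at which points j
    and j + 1 (0-based) end up in the same segment.

    Naive O(n^2) sketch. Assumes segments only merge as lambda grows and that
    each jump keeps the sign it has in y, so that between two merges the level
    of segment k moves linearly with slope (s_k - s_{k-1}) / l_k, as in (3).
    """
    n = len(y)
    delta = [1.0] * n if delta is None else delta
    lam_values = [0.0] * max(n - 1, 0)  # equal neighbours in y merge at lambda = 0
    segs, signs = [], []  # a segment is [first, last, length l_k, level u_k]
    for i in range(n):
        if segs and y[i] == y[i - 1]:
            segs[-1][1] = i
            segs[-1][2] += delta[i]
        else:
            if segs:
                signs.append(1 if y[i] > y[i - 1] else -1)
            segs.append([i, i, float(delta[i]), float(y[i])])

    lam = 0.0
    while len(segs) > 1:
        s = [0] + signs + [0]  # s_0 = s_K = 0 at the borders
        slopes = [(s[k + 1] - s[k]) / seg[2] for k, seg in enumerate(segs)]
        # Find the junction whose gap closes first (the first junction always closes).
        best_dt, best_k = math.inf, 0
        for k in range(len(segs) - 1):
            gap = signs[k] * (segs[k + 1][3] - segs[k][3])  # >= 0 up to rounding
            rate = signs[k] * (slopes[k + 1] - slopes[k])   # < 0 when the gap closes
            if rate < 0:
                dt = max(gap, 0.0) / -rate
                if dt < best_dt:
                    best_dt, best_k = dt, k
        # Move every level to the merge value, then fuse the two segments.
        lam += best_dt
        for seg, g in zip(segs, slopes):
            seg[3] += g * best_dt
        left, right = segs[best_k], segs[best_k + 1]
        lam_values[left[1]] = lam
        length = left[2] + right[2]
        level = (left[2] * left[3] + right[2] * right[3]) / length
        segs[best_k:best_k + 2] = [[left[0], right[1], length, level]]
        del signs[best_k]
    return lam_values


def cutting_set(lam_values, lam):
    """Junctions j (between points j and j + 1) that are still cuts at lambda."""
    return [j for j, v in enumerate(lam_values) if v > lam]


if __name__ == "__main__":
    lam_values = merge_values([0.0, 0.0, 10.0, 10.0])
    print(lam_values)                    # [0.0, 10.0, 0.0]
    print(cutting_set(lam_values, 5.0))  # [1]
```

In the usage example, the two equal pairs are merged from the start, and the two plateaus, each moving at speed $1/l_k = 1/2$, meet at $\lambda = 10$; below that value the junction between them stays in the cutting set.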

Based on the variation of the number of extrema of the restoration as a function of $\lambda$, an adaptive choice of the hyper-parameter $\lambda$ is proposed. Simulations show that our estimate performs similarly to the state of the art, all being close to the optimal choice computed with the original signal. The number of extrema for every $\lambda$ can be estimated simultaneously with $\Lambda$.

Besides, we analysed the local influence on $\Lambda$ of a new sample introduced at the end of the sequence: only a small part of $\Lambda$ is changed. Based on this local property, we proposed an online algorithm (cf. Algorithm 2 in [3]) that updates only the changed part of $\Lambda$, which is more efficient than the offline estimation. The locality of TV denoising motivates the use of sliding windows to deal with non-stationary signals, assuming local stationarity inside each window.

III Adaptation to sliding windows

In this section, we present some theoretical elements about the local behaviour of the restoration, and adapt the online algorithm to sliding windows.

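Let us consider two successive sliding windows $W_t = (y_t, \dots, y_{t+w-1})$ and $W_{t+1} = (y_{t+1}, \dots, y_{t+w})$: $W_{t+1}$ is $W_t$ from which the first point $y_t$ is removed and to which a new point $y_{t+w}$ is added. For the sake of simplicity, the samples of each window are indexed locally, from 1 to $w$.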

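We note $\tilde{x}(\lambda)$ and $\hat{x}(\lambda)$ the restorations of $W_t$ and $W_{t+1}$ for a given $\lambda$. To simplify the presentation, the elements concerning $W_t$ are marked with a tilde, and those concerning $W_{t+1}$ with a hat. For example, for a given $\lambda$, the segment length set of $\tilde{x}$ is $\tilde{L} = (\tilde{l}_1, \dots, \tilde{l}_{\tilde{K}})$, and that of $\hat{x}$ is $\hat{L} = (\hat{l}_1, \dots, \hat{l}_{\hat{K}})$.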

III-A Influence of slide movement

The update of the restored signal and of $\Lambda$ must account for the shift of indices from $W_t$ to $W_{t+1}$: a restored point is unchanged by the slide movement if $\hat{x}_i = \tilde{x}_{i+1}$. In [3], we proved the following theorem:

Theorem 1.

If there exists an index such that , then the new restoration satisfies for all .

The diffusion from the "new" sample changes only the last part of the restoration of $W_t$, back to the junction of two segments whose variation sign corresponds to the condition of Theorem 1. A similar theorem (Theorem 2) holds for the influence of the first sample on the sliding window: removing $y_t$ changes only the first part of the restoration.

Theorem 2.

If there exists an index such that , then the new restoration satisfies for all .

To sum up, when updating $\tilde{x}$ to $\hat{x}$, only the first and the last parts of $\tilde{x}$ are changed. We introduce the following definitions:

Definition 1.

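Consider the last segment of $\tilde{x}$ which satisfies the condition of Theorem 1 and the first segment which satisfies the condition of Theorem 2:

- the last part of the window, delimited by the former segment and possibly changed by the new sample $y_{t+w}$, is called the non right-isolated sequence;
- the first part of the window, delimited by the latter segment and possibly changed by the removal of $y_t$, is called the non left-isolated sequence;
- the part between them is called the isolated sequence.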

III-B Independence between segments

For a given $\lambda$, the cutting set is given by $C(\lambda) = \{i : \Lambda_i > \lambda\}$. In [3], we showed that the points inside a segment of $\tilde{x}(\lambda)$ are merged for values of $\lambda$ below the current one, and that the values of $\Lambda$ provoking these merges are independent of the points outside the segment. By introducing the virtual segment (cf. Definition 2), the estimation of $\Lambda$ can be broken into sub-problems, one for each virtual segment, as shown in Proposition 1. Combined with Theorems 1 and 2, this implies that the elements of $\Lambda$ inside the isolated sequence are not influenced by the sliding movement.

Definition 2.

Let and , we have , , and . For each segment with , let , we introduce the virtual segment where:

-

•

, for and .

-

•

, for and .

Proposition 1.

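Let $\Lambda$ be the estimation with all the samples, and consider the estimation obtained with the virtual segment of segment $k$ alone. Then the elements of $\Lambda$ associated with the points inside segment $k$ are equal to the corresponding elements of this virtual-segment estimation.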

III-C Proposition of algorithms

For the window $W_t$, the method of [3] yields two vectors, among which $\tilde\Lambda$. The corresponding results after sliding, for $W_{t+1}$, can be obtained by updating these two vectors instead of recomputing them. In this section, we propose an adaptation to sliding windows of the online algorithm proposed in [3].

We only detail the online estimation of $\hat\Lambda$ from $\tilde\Lambda$. In what follows, applying Algorithm 1 in [3] to a given sequence means running it on the corresponding samples and sampling periods.

After choosing $\lambda$, called the cutting point, the restoration of $W_t$ is $\tilde{x}(\lambda)$. We note $i_l$ and $k_l$ respectively the last point and the last segment of the non left-isolated sequence of $\tilde{x}$, and $i_r$ and $k_r$ respectively the first point and the first segment of its non right-isolated sequence. $\tilde\Lambda$ can then be split into four parts:

1. some elements of $\tilde\Lambda$ are changed following Proposition 1;
2. the part corresponding to the non left-isolated sequence is influenced by the removal of the first point $y_t$;
3. the part corresponding to the non right-isolated sequence is influenced by the new point $y_{t+w}$;
4. all the other elements remain the same.

We first treat the unchanged part of $\tilde\Lambda$: because of the slide, its elements are simply shifted by one index, $\hat\Lambda_i = \tilde\Lambda_{i+1}$.

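For the non right-isolated sequence, the corresponding elements of $\hat\Lambda$ can be estimated by applying Algorithm 1 in [3] to a virtual segment, in the sense of Definition 2, built on this sequence together with the new sample $y_{t+w}$.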

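For the non left-isolated sequence, the corresponding elements of $\hat\Lambda$ can be estimated in the same way, with a virtual segment built on this sequence once the first sample $y_t$ has been removed.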

The results obtained for the isolated and non-isolated sequences are then assembled in $\hat\Lambda$, each sequence filling its own range of indices.

It remains . Let containing indeed all the last points of ’s segments, we can get .

Finally, can be assembled in the following way:

| (4) |

with giving the index of in the vector .

The algorithm adapted to the sliding window is summarized in Algorithm 1. With a good choice of $w$, only a small part of the window needs to be updated from $\tilde\Lambda$. The overall complexity for estimating the restoration in a window of size $w$ is in .

IV Performance analysis

IV-A Application: noise variance monitoring

We propose a method to detect shifts of the noise variance based on our restoration method. For an observed signal $y$ of size $n$, we take a sliding window of size $w$, with $w < n$. For each window $W_t$, we apply our denoising algorithm, with the automatic choice of $\lambda$, to the samples and sampling periods of $W_t$ to estimate the restored signal $\hat{x}_t$. The windowed restoration residual $r_t$ is the difference between the samples of $W_t$ and $\hat{x}_t$. The variance of the residual inside $W_t$ can be estimated by:

| (5) |

with $\bar{r}_t$ the mean value of the residual $r_t$.
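A naive, offline sketch of this residual monitoring: each window is restored independently, then the standard deviation of its residual is computed. The `restore` argument is a hypothetical placeholder for the automatic TV denoiser, and the function name is also hypothetical; the online update of Section III would avoid recomputing each window from scratch. Whether (5) normalizes by $w$ or by $w - 1$ is not stated here, so the $1/w$ choice (`statistics.pstdev`) is an assumption.

```python
import statistics
from typing import Callable, List, Sequence


def residual_std_per_window(
    y: Sequence[float],
    w: int,
    restore: Callable[[Sequence[float]], Sequence[float]],
) -> List[float]:
    """Standard deviation of the restoration residual in every window of size w.

    `restore` stands for the windowed denoiser and must return a restoration
    with the same length as its input. Windows slide by one sample.
    """
    if not 1 < w <= len(y):
        raise ValueError("window length must satisfy 1 < w <= len(y)")
    out = []
    for t in range(len(y) - w + 1):
        window = y[t:t + w]
        xhat = restore(window)
        residual = [yi - xi for yi, xi in zip(window, xhat)]
        out.append(statistics.pstdev(residual))  # 1/w normalisation (assumed)
    return out


if __name__ == "__main__":
    # Crude stand-in restoration: the mean of each window (a constant signal).
    def constant(win):
        return [sum(win) / len(win)] * len(win)

    print(residual_std_per_window([0.0, 2.0, 0.0, 2.0], 2, constant))  # [1.0, 1.0, 1.0]
```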

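For the simulations, the noise realisation is $\varepsilon = y - x$, with $x$ the original signal. The reference noise standard deviation inside $W_t$, noted $\sigma_t$, is the empirical standard deviation of $\varepsilon$ restricted to $W_t$.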

Since $\hat{x}_t$ is a good restoration of $x$ on $W_t$, $r_t$ is similar to $\varepsilon$, which means that the residual standard deviation $\hat\sigma_t$ obtained from (5) is close to $\sigma_t$ inside each window $W_t$. As the noise variance is not available for real data, we propose to detect variance shifts using the residual standard deviation vector $(\hat\sigma_1, \dots, \hat\sigma_{n-w+1})$.

For estimating the noise variance in a window of size , the complexity is in with the online implementation of sliding windows, and the overall complexity for monitoring the variance of a signal of size is in .

IV-B Results

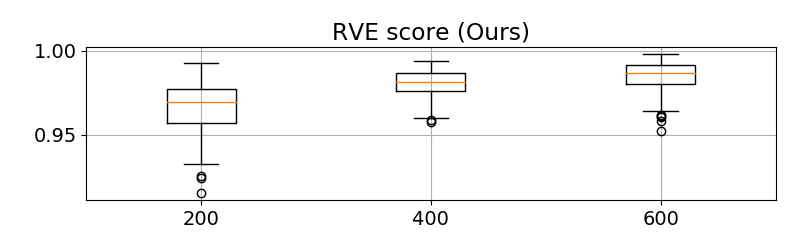

For variance monitoring, the method needs to estimate the noise variance accurately in the ideal case, or at least to capture its variation in a more realistic case. We use the following criteria to evaluate the performance of variance monitoring:

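- Average bias: $\bar{b} = \frac{1}{T}\sum_{t=1}^{T} (\hat\sigma_t - \sigma_t)$, with $T = n - w + 1$ the number of sliding windows.
- Ratio of variation explained:

$$\mathrm{RVE} = 1 - \frac{\sum_{t=1}^{T} (\hat\sigma_t - \bar{b} - \sigma_t)^2}{\sum_{t=1}^{T} (\sigma_t - \bar\sigma)^2} \tag{6}$$

where $\bar\sigma = \frac{1}{T}\sum_{t=1}^{T}\sigma_t$. It is the $R^2$ score between $\sigma_t$ and $\hat\sigma_t$ after shifting the two series to the same mean value. RVE ranges from $-\infty$ to $1$, and $\mathrm{RVE} = 1$ indicates that our estimate perfectly explains the variation of $\sigma_t$.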
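The two criteria can be computed as below, assuming $\sigma_t$ and $\hat\sigma_t$ are given as two equal-length lists over the sliding windows. The function names `average_bias` and `rve` are hypothetical; `rve` follows the mean-adjusted $R^2$ reading of (6).

```python
def average_bias(sigma_true, sigma_est):
    """Mean of (estimate - reference) over all windows."""
    if len(sigma_true) != len(sigma_est) or not sigma_true:
        raise ValueError("need two non-empty series of equal length")
    return sum(e - s for s, e in zip(sigma_true, sigma_est)) / len(sigma_true)


def rve(sigma_true, sigma_est):
    """R^2 between the two series after removing the average bias, as in (6)."""
    b = average_bias(sigma_true, sigma_est)
    mean_true = sum(sigma_true) / len(sigma_true)
    sse = sum((e - b - s) ** 2 for s, e in zip(sigma_true, sigma_est))
    sst = sum((s - mean_true) ** 2 for s in sigma_true)
    if sst == 0:
        raise ValueError("reference series is constant: RVE is undefined")
    return 1.0 - sse / sst


if __name__ == "__main__":
    truth = [1.0, 2.0, 3.0]
    shifted = [0.5, 1.5, 2.5]  # right shape, constant under-estimation
    flat = [2.0, 2.0, 2.0]     # right level on average, no variation
    print(average_bias(truth, shifted), rve(truth, shifted))  # -0.5 1.0
    print(average_bias(truth, flat), rve(truth, flat))        # 0.0 0.0
```

The usage lines show why both criteria are reported: a constantly shifted estimate keeps a perfect RVE despite its bias, while a flat estimate with no bias explains none of the variation of $\sigma_t$.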

We compare our method with the Median Absolute Deviation (MAD) estimator, following the proposals of [12] and [13], applied to each sliding window.

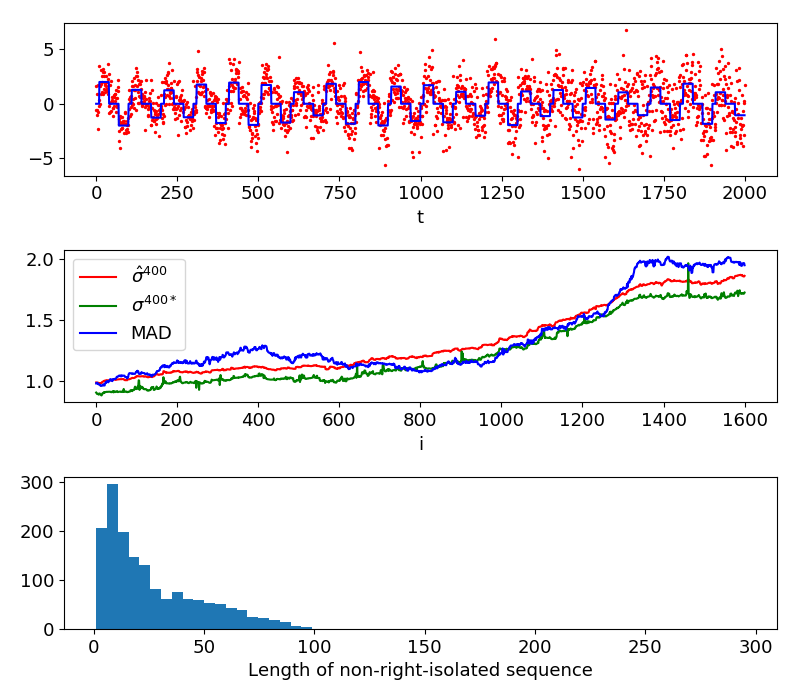

We first fix the window length $w$. Our method requires a parameter standing for the length of the approximation of the derivative; see p. 13 of [3] for more details. We apply our method to a simulated piecewise constant signal $x$. An example of noisy signal $y$ and the noise variance estimates for 400-point windows ($w = 400$) are shown in Figure 1. Both MAD and our method give estimates close to the variance of the noise realisation for every sliding window. In this example, our method underestimates the variance, but the variation of our estimate across sliding windows follows that of $\sigma_t$ well. MAD gives a precise estimate but does not follow the variation of $\sigma_t$. The lengths of the non right-isolated sequences of all windows (shown in Figure 1) are smaller than the window size $w$, which means that the influence of a new sample remains inside each window and validates our assumption about the local influence.

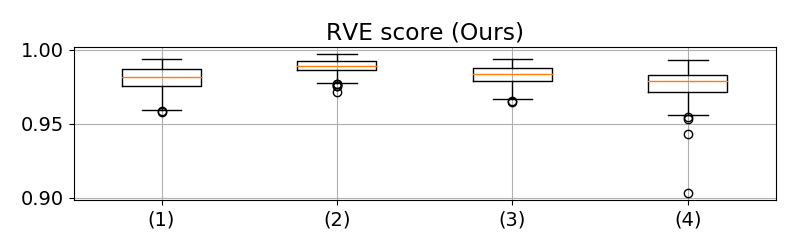

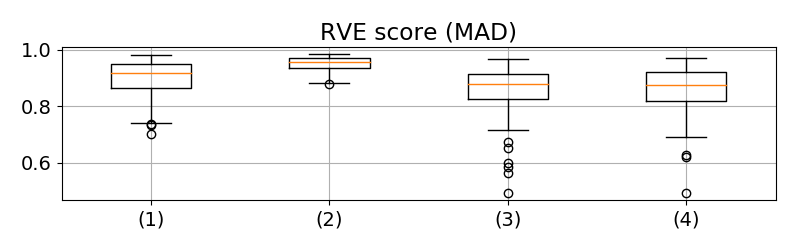

We tested the performance of MAD and of our approach under different noise hypotheses:

-

1.

with .

-

2.

with for and for .

-

3.

with .

-

4.

.

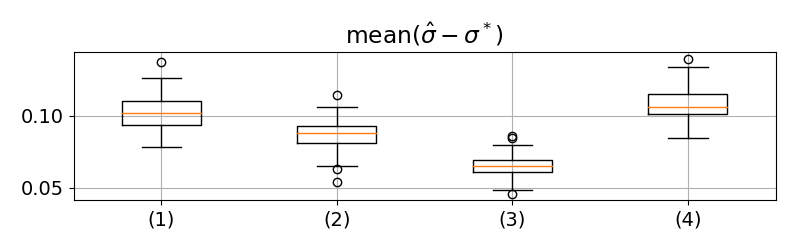

We ran 100 simulations for each type of noise, and the results are gathered in Figure 2. For most simulations, our method obtains a high RVE and outperforms MAD. The high RVE score indicates that the variation of our estimate fits that of the real values well, which allows us to monitor the variation of the noise variance for real signals collected from plants. However, the average bias shows that our method always underestimates the noise variance, and that this bias depends on the type of noise: a universal offset cannot be estimated to remove it in the general case.

The window length $w$ plays an important role in both restoration and variance monitoring, and needs to be chosen carefully. A short window can capture local variations of the noise variance, but the newest point (i.e. the $w$-th point) may still have a strong influence on the past points of the window, which limits the restoration performance for these points. We tested several window lengths with one of the noise types above, and the results are shown in Figure 3. A longer window provides better monitoring performance, but local variations of the noise variance are then neglected, and the stationarity hypothesis inside the window may no longer hold. The choice of $w$ is beyond the scope of this article and remains an open question.

To sum up, our method cannot provide a precise estimate of the noise variance values, but its estimate follows the variation of the real value well, with an estimation error depending on the type of noise and on the shape of the original signal.

One application of our method is the detection of noise variance shifts. The residual variance should stay stable during normal operation, and a noise variance shift can then be detected with a prefixed threshold relative to this stable regime, e.g. when the residual standard deviation exceeds its stable-regime value by more than the threshold for several successive sliding windows.
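The threshold rule can be sketched as a small detector. Its parameters are assumptions rather than values from this work: the baseline is the mean of the first `n_ref` residual standard deviations (assumed to belong to the stable regime), the threshold `rel_threshold` is relative to that baseline, and an alarm requires `n_successive` consecutive windows above it. The function name `detect_variance_shift` is hypothetical.

```python
from typing import List, Sequence


def detect_variance_shift(
    sigma_res: Sequence[float],
    n_ref: int,
    rel_threshold: float,
    n_successive: int,
) -> List[int]:
    """Return the window indices at which an alarm is raised.

    Baseline = mean residual std over the first n_ref windows (stable regime).
    An alarm is raised at window t when the last n_successive windows all
    exceed baseline * (1 + rel_threshold).
    """
    if not 0 < n_ref <= len(sigma_res) or n_successive < 1:
        raise ValueError("invalid reference length or run length")
    baseline = sum(sigma_res[:n_ref]) / n_ref
    limit = baseline * (1.0 + rel_threshold)
    alarms, run = [], 0
    for t in range(n_ref, len(sigma_res)):
        run = run + 1 if sigma_res[t] > limit else 0  # length of the current excursion
        if run >= n_successive:
            alarms.append(t)
    return alarms


if __name__ == "__main__":
    sigma = [1.0] * 10 + [1.5] * 5  # the noise level rises at window 10
    print(detect_variance_shift(sigma, n_ref=5, rel_threshold=0.2, n_successive=3))
    # -> [12, 13, 14]
```

Requiring several successive windows trades a short detection delay for robustness: a single noisy window above the threshold does not raise an alarm.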

V Conclusion

In this article, we adapted the TV denoising method proposed in [3] to sliding windows, based on the local property of the TV-denoised signal. We believe that our adaptive choice of $\lambda$ also works for non-stationary signals. We applied this adaptation to signals with a time-varying noise variance. Simulation results show that the variance of the restoration residuals follows that of the noise well, and that this method can be used to monitor the ground noise variance in real time with limited computational resources. However, the performance of both restoration and variance monitoring depends on the choice of the window length, which is our ongoing research interest. The application to real data is also ongoing work.

Acknowledgment

This work has been supported by the EIPHI Graduate School (ANR-17-EURE-0002).

References

- [1] L. I. Rudin, S. Osher, and E. Fatemi, "Nonlinear total variation based noise removal algorithms," Physica D: Nonlinear Phenomena, 1992.

- [2] A. Chambolle and P.-L. Lions, "Image recovery via total variation minimization and related problems," Numerische Mathematik, 1997.

- [3] Z. Liu, M. Perrodin, T. Chambrion, and R. Stoica, "Revisit 1D total variation restoration problem with new real-time algorithms for signal and hyper-parameter estimations," arXiv preprint arXiv:2012.09481, 2020.

- [4] R. J. Tibshirani and J. Taylor, "The solution path of the generalized lasso," The Annals of Statistics, 2011.

- [5] I. Pollak, A. S. Willsky, and Y. Huang, "Nonlinear evolution equations as fast and exact solvers of estimation problems," IEEE Transactions on Signal Processing, 2005.

- [6] C. M. Stein, "Estimation of the mean of a multivariate normal distribution," The Annals of Statistics, 1981.

- [7] D.-H. Shin, R.-H. Park, S. Yang, and J.-H. Jung, "Block-based noise estimation using adaptive Gaussian filtering," IEEE Transactions on Consumer Electronics, 2005.

- [8] S. Beheshti and M. A. Dahleh, "A new information-theoretic approach to signal denoising and best basis selection," IEEE Transactions on Signal Processing, 2005.

- [9] M. Hashemi and S. Beheshti, "Adaptive noise variance estimation in BayesShrink," IEEE Signal Processing Letters, 2010.

- [10] J. Han and L. Xu, "A new method for variance estimation of white noise corrupting a signal," in I2MTC, 2006.

- [11] S. Pyatykh, J. Hesser, and L. Zheng, "Image noise level estimation by principal component analysis," IEEE Transactions on Image Processing, 2012.

- [12] D. L. Donoho and I. M. Johnstone, "Ideal spatial adaptation by wavelet shrinkage," Biometrika, 1994.

- [13] S. Sardy and H. Monajemi, "Efficient threshold selection for multivariate total variation denoising," Journal of Computational and Graphical Statistics, 2019.